Social tech debt doesn’t mean what you think it means

Rebuttal to Society’s Tech Debt & Software’s Gutenberg Moment

- Authors

- Ed Frank

I recently read Society’s Technical Debt and Software’s Gutenberg Moment by Paul Kedrosky and Eric Norlin of SK Ventures on the economics of how AI will impact the software industry. While I agree AI will significantly impact the software engineering labor market, I don’t agree with the finer points or with the methodology underlying their conclusions.

Why listen to me? Well, I’m not some rando responding to everything on the internet. In fact, that’s not my general impulse. But I’ve been involved in software development for over 30 years and have led software-driven teams as a consultant (in various capacities) for over 25 years. Additionally, I have bachelor’s and master’s degrees in economics and have been an adjunct professor of economics at the MBA level (off and on) since the early 90s, including a 2-year stint as a visiting professor of economics at the Williams College of Business at Xavier University. Finally, in the very early years of my career, I argued economic costing methodology on behalf of Cincinnati Bell Telephone before the Public Utilities Commission of Ohio. All of that experience helped me spot major flaws in how economic theory is applied here, a topic I’ve worked in for decades.

tl;dr

Economics is one of those topics that you would think would be fairly straightforward to apply, but in reality, it isn’t. Like most things, we simplify or assume things to make them easy to understand. Unfortunately, we forget the underlying details and assumptions when we try to apply them and then often come to the wrong conclusions.

Unlike the authors, I believe software and software developers operate in understandable and fairly efficient marketplaces. I also believe the impact of AI is not as dire for highly-skilled and creative software developers in our domestic market. So, instead of mass layoffs and plummeting salaries, I see higher demand and higher salaries for those developers who leverage AI to help them become more productive. In other words, AI will be a complement to these creative developers (in the economic sense).

Like the authors, I do foresee disruption due to AI, but in highly commoditized work that can be isolated, more easily specified, and lower valued. In other words, AI will be a substitute for commoditized developers (in the economic sense).

In the end, I don’t agree with the premise that we currently carry a societal technical debt in the form of not having enough software:

- I’ve seen no proof in the authors’ analysis to justify that conclusion.

- I don’t know of a single metric that would even prove that to be the case.

- My own personal experience with the ubiquity of software, which has invaded every aspect of our lives, does not support that.

If there is societal technical debt, it exists because of the way we currently build software. The build cycle is often overly complex and wasteful, and the product lifecycle is far too expensive and fragile. My biggest concern is that AI will only exacerbate this problem, not fix it.

Analysis

The authors misinterpret the fundamental economic methodologies of supply and demand, Baumol’s cost disease, and even how the market is defined. This leads them to arrive at a flawed assumption that the software market is unique in its evolution and operation and therefore is broken, causing us to end up with less software than is optimal.

Let’s start by considering an economic model of software supply and demand. Software has a cost, and it has markets of buyers and sellers. Some of those markets are internal to organizations. But the majority of those markets are external, where people buy software in the form of apps, or cloud services, or games, or even embedded in other objects that range from Ring doorbells to endoscopic cameras for cancer detection. All of these things are software in a few of its myriad forms.

The first error is to assume software is a definable market. Software is much like the pieces of a chair from Ikea: it’s useless until assembled, and then it has little value (what economists call utility) until used to sit. Software can be used as part of the production function for a company or can be used as a substitute for a storefront (i.e., marketplace) or can be a consumer product. In other words, software is not a single market but exists in multiple markets and in various forms that impact both the consumption and supply of goods and services. So the reductive proposition that we should consider all software as if it were a single set of supply and demand curves is unrealistic.

But technology has a habit of confounding economics. When it comes to technology, how do we know those supply and demand lines are right? The answer is that we don’t. And that’s where interesting things start happening.

Sometimes, for example, an increased supply of something leads to more demand, shifting the curves around.

In introductory economics, we would say that a shift in supply changes the quantity demanded (a movement along the demand curve), whereas changes in population, tastes and preferences, income, and the prices of related goods shift the demand curve itself. It’s not that mysterious.

For example, the more utility gained from the software solution, the more its demand curve shifts out. The cross-price elasticity of devices—on which software solutions are used—also has a direct impact on the demand curve for software solutions. In other words, as smartphones continue to become more prevalent and more powerful, the demand for smartphone software increases.
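The difference between a movement along the demand curve and a shift of the curve can be seen in a toy linear market. The sketch below is illustrative only: the linear curves and every coefficient are assumptions chosen for easy arithmetic, not estimates of any real software market.

```python
def equilibrium(a, b, c, d):
    """Market-clearing point for demand Qd = a - b*P and supply Qs = c + d*P.

    All four coefficients are hypothetical, chosen only for illustration.
    """
    price = (a - c) / (b + d)
    quantity = a - b * price
    return price, quantity

# Baseline market.
base = equilibrium(a=100, b=2, c=10, d=1)

# Supply shifts out (c: 10 -> 40). Demand is unchanged, so the new
# equilibrium is a different point on the SAME demand curve.
supply_shift = equilibrium(a=100, b=2, c=40, d=1)

# Demand shifts out (a: 100 -> 130), e.g. because a complementary
# device became cheaper. The whole demand curve has moved.
demand_shift = equilibrium(a=130, b=2, c=10, d=1)

print("baseline     (P, Q):", base)
print("supply shift (P, Q):", supply_shift)
print("demand shift (P, Q):", demand_shift)
```

With a supply shift, price falls and quantity rises along the original demand curve. With a demand shift, price and quantity both rise. Nothing mysterious is needed to explain either outcome.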

The rest of the economy doesn’t work this way, nor have historical economies. Things don’t just tumble down walls of improved price while vastly improving performance. While many markets have economies of scale, there hasn’t been anything in economic history like the collapse in, say, CPU costs, while the performance increased by a factor of a million or more.

To make this more palpable, consider that if cars had improved at the pace computers have, a modern car would:

- Have more than 600 million horsepower

- Go from 0-60 in less than a hundredth of a second

- Get around a million miles per gallon

- Cost less than $5,000

This is classic recency bias, and tech is the most recent example of this phenomenon. Think about the age of steam, the introduction of electricity, or internal combustion engines. They all had both producer and consumer impacts similar to tech.

We can see similar patterns in rocketry: Robert Goddard’s first rocket flew at 60 miles per hour in 1926, and only 12 years later rockets were flying at 3,641 miles per hour. Just under 30 years after that, the Saturn V rocket hit 25,000 miles per hour! From an economic perspective, the Delta E rocket cost $81,000 per pound to put satellites in orbit, whereas the Falcon 9 costs only about $1,200 per pound. While I admit the rate of change isn’t anywhere near that of CPU costs and performance, I would point out that CPUs and storage enjoy both production advantages (miniaturization down to the atomic level is an easier problem to solve) and the unique economies of scale that computer chips can take advantage of.
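For a sense of scale, the rocketry figures quoted above can be reduced to simple ratios. This is a back-of-the-envelope sketch that uses only the numbers in the paragraph; the variable names are mine.

```python
# Figures as quoted in the text.
speed_1926_mph = 60
speed_1938_mph = 3_641
saturn_v_mph = 25_000
delta_e_cost_per_lb = 81_000
falcon_9_cost_per_lb = 1_200

# How many times faster, relative to Goddard's first rocket.
speed_multiple_12_years = speed_1938_mph / speed_1926_mph
speed_multiple_saturn_v = saturn_v_mph / speed_1926_mph

# How many times cheaper it became to put a pound in orbit.
cost_reduction_factor = delta_e_cost_per_lb / falcon_9_cost_per_lb

print(f"Speed after 12 years: {speed_multiple_12_years:.1f}x")
print(f"Saturn V speed:       {speed_multiple_saturn_v:.1f}x")
print(f"Launch cost:          {cost_reduction_factor:.1f}x cheaper")
```

These are large multiples, but they are still orders of magnitude short of a million-fold gain.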

By that, we don’t literally mean “software” will see price declines, as if there will be an AI-induced price war in word processors like Microsoft Word, or in AWS microservices. That is linear and extrapolative thinking. Having said that, we do think the current frenzy to inject AI into every app or service sold on earth will spark more competition, not less. It will do this by raising software costs (every AI API call is money in someone’s coffers), while providing no real differentiation, given most vendors will be relying on the same providers of those AI API calls.

I think this is a stretch. AI is inexpensive to create, and the only differentiator is the scale and variety of the data used to train it. So, if the margin is there, economics tells you that investors will flock to it to get their piece of the supernormal profits being generated, which would cause the cost per API call to fall.

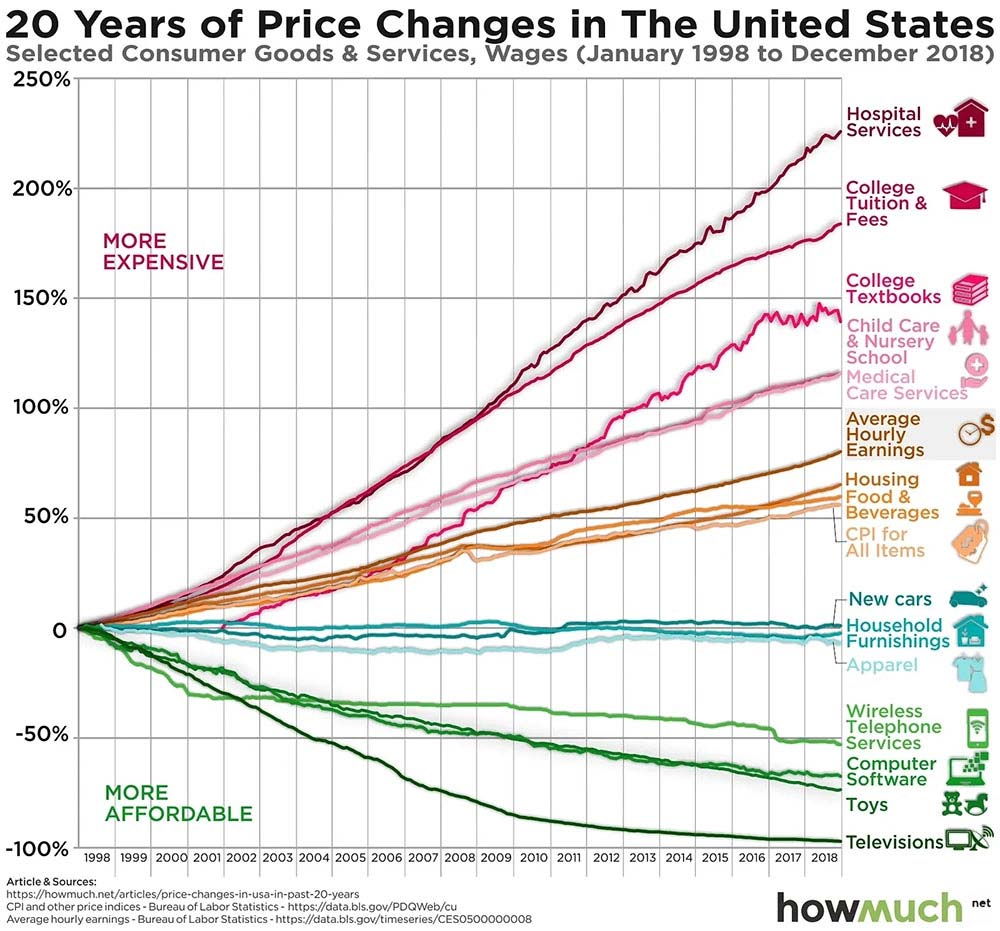

Economist William Baumol is usually credited with this insight, and for that it is called Baumol’s cost disease. You can see the cost disease in the following figure, where various products and services (spoiler: mostly in high-touch, low-productivity sectors) have become much more expensive in the U.S., while others (non-spoiler: mostly technology-based) have become cheaper. This should all make sense now, given the explosive improvements in technology compared to everything else. Indeed, it is almost a mathematical requirement.

This chart that nearly everyone uses to explain Baumol’s Cost Disease is used incorrectly. Note that they use the price of final goods and services, not the labor costs to produce them. The classic definition for Baumol’s cost disease is: the rise of wages in jobs that have experienced little or no increase in labor productivity, in response to rising salaries in other jobs that have experienced higher productivity growth.

So if you look at college tuition and fees, you would expect a dramatic increase in the cost of labor (faculty), but instead, a recent study shows that across all full-time college faculty (private and public) salaries have increased by an inflation-adjusted 9.5% … over 50 years (less than 0.2% per year). This doesn’t really reflect Baumol’s cost disease at all. What we are looking at is more reflective of inefficient markets for end goods and services due to financial, regulatory, or other barriers to entry.
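The "less than 0.2% per year" figure can be checked by annualizing the 50-year total. This is a minimal sketch that assumes smooth compound growth over the period; the function name is mine.

```python
def annualized_rate(total_growth, years):
    """Constant yearly rate that compounds to `total_growth` over `years`."""
    return (1 + total_growth) ** (1 / years) - 1

# 9.5% inflation-adjusted growth over 50 years, as cited in the text.
faculty_real_growth = annualized_rate(0.095, 50)

print(f"Annualized real faculty salary growth: {faculty_real_growth:.3%}")
```

The compound rate comes out at roughly 0.18% per year, consistent with the claim.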

Meanwhile, software is chugging along, producing the same thing in ways that mostly wouldn’t seem vastly different to developers doing the same things decades ago. Yes, there have been developments in the production and deployment of software, but it is still, at the end of the day, hands pounding out code on keyboards. This should seem familiar, and we shouldn’t be surprised that software salaries stay high and go higher, despite the relative lack of productivity. It is Baumol’s cost disease in a narrow, two-sector economy of tech itself.

These high salaries play directly into high software production costs, as well as limiting the amount of software produced, given factor production costs and those pesky supply curves.

The idea that U.S. software engineer wages are significantly higher than wages in other industries may be true, but not in all cases.

In a Code Summit study, we can see that related software wages have increased unevenly from 2001 to 2019.

In a Harvard Business Review study, we can see that while IT wages have increased, they remain lower than wages in non-IT STEM professions.

What’s more interesting is that during this same time frame (2000–2018) prices rose by about 46%, meaning that in real terms average IT wages grew by only about 0–2% per year.
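To connect the cumulative inflation figure to the real wage range, here is a rough sketch using the Fisher relation between nominal and real growth. It uses only the 46% and 0-2% figures from the text; treating 2000 to 2018 as 18 full years of smooth compounding is my assumption.

```python
# Figures from the text.
cumulative_inflation = 0.46
years = 18  # assumption: 2000 to 2018 treated as 18 full years

# Constant yearly inflation rate that compounds to 46% over the period.
annual_inflation = (1 + cumulative_inflation) ** (1 / years) - 1

def nominal_from_real(real_growth, inflation):
    """Fisher relation: (1 + nominal) = (1 + real) * (1 + inflation)."""
    return (1 + real_growth) * (1 + inflation) - 1

# Nominal yearly raises implied by 0% and 2% real growth.
nominal_low = nominal_from_real(0.00, annual_inflation)
nominal_high = nominal_from_real(0.02, annual_inflation)

print(f"Annual inflation:     {annual_inflation:.2%}")
print(f"Implied nominal range: {nominal_low:.2%} to {nominal_high:.2%}")
```

Under these assumptions, inflation averaged roughly 2.1% per year, so real growth of 0-2% corresponds to nominal raises of only about 2.1% to 4.2% per year.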

So the relative flatness of real wages in the software industry is highly reflective of the marginal increase in the productivity of creating code because the way in which software is built has not changed all that much over time.

Another misinterpretation is that the authors look only at U.S. wages in the development of software. This is not accurate, as most companies offshore some or all of this work to match the marginal cost of development to the marginal revenue of the thing they are developing.

Those of us who have led or participated in distributed development (onshore and offshore) understand there is a coordination cost to offshore work, but that is typically offset by significantly lower wages. We often reduce the complexity and creativity of the required work to try to overcome some of these coordination costs. Asynchronous communication is great, but the longer it takes to get a response, the harder it is to collaborate.

In other words, low value-added code development (well-defined, isolatable, and repetitive and/or simplistic components of work) is transferred to the lowest cost delivery approach to match the value derived to the cost of production.

The more creative and iterative work is done in shared time zones, common languages, and shared cultural understandings to optimize the work. Why? This work is higher-value, typically ill-defined, and often novel first-of-a-kind work. Complex, high-value work is what the best software engineers and developers are great at solving. This arrangement allows software engineers and developers to work in real time, interactively, and iteratively with their business counterparts to home in on value-driven business outcomes delivered at higher speed.

And, while markets have clearing prices, where supply and demand meet up, we still know that when wages stay higher than comparable positions in other sectors, less of the goods gets produced than is societally desirable. In this case, that underproduced good is…software. We end up with a kind of societal technical debt, where far less is produced than is socially desirable—we don’t know how much less, but it is likely a very large number and an explanation for why software hasn’t eaten much of the world yet. And because it has always been the case, no-one notices.

The first half of this statement isn’t true at all. Software engineers are not necessarily paid a higher wage than comparable positions, nor are those wages artificially constrained in any way. Wages are set by supply and demand and are bounded above by the value workers are able to provide at that wage rate. In other words, firms are only willing to pay a wage that results in the output costs (including opportunity cost) being equal to or less than the price at which they can sell the output. If wages are too high, businesses will substitute cheaper labor where they can and/or capital (i.e., technology) for some of the labor to make the remaining labor more productive.
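The wage ceiling described here can be sketched as a simple break-even calculation. Everything below is hypothetical: the function names, the single-product firm, and all dollar figures are assumptions made for illustration, not data from any study.

```python
def max_affordable_wage(price, units_per_worker, other_cost_per_unit):
    """Highest yearly wage at which unit cost does not exceed the price.

    Break-even: wage / units_per_worker + other_cost_per_unit <= price.
    """
    return (price - other_cost_per_unit) * units_per_worker

def worth_hiring(wage, price, units_per_worker, other_cost_per_unit):
    """True if the firm at least breaks even on the worker at this wage."""
    return wage <= max_affordable_wage(price, units_per_worker, other_cost_per_unit)

# Hypothetical firm: sells each unit for $50, with $20 of non-labor
# cost per unit; a developer contributes 4,000 units of output a year.
ceiling = max_affordable_wage(price=50, units_per_worker=4_000, other_cost_per_unit=20)

# If better tooling lifts output to 5,000 units, the ceiling rises too.
ceiling_with_tools = max_affordable_wage(price=50, units_per_worker=5_000, other_cost_per_unit=20)

print("Wage ceiling:            ", ceiling)
print("Wage ceiling with tools: ", ceiling_with_tools)
print("Hire at $130k today?     ", worth_hiring(130_000, 50, 4_000, 20))
print("Hire at $130k with tools?", worth_hiring(130_000, 50, 5_000, 20))
```

In this toy setup a wage above the ceiling prices the worker out, while a productivity gain raises the ceiling. The same mechanism lets a complementary technology support higher wages.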

In terms of what is the correct socially desirable amount of software, the answer is that as long as markets are efficient (using another concept from Baumol called contestable markets), the results will be optimal. So far, I haven’t seen any evidence of inefficiency in the labor market.

Finally, and in my opinion, software is nearly ubiquitous. I can remember a time when ML stood for Machine Language, and I was one of the first in my company (1988) to use tools like Harvard Graphics, Lotus 1-2-3, and WordPerfect. (We didn’t have an intranet at the time, and the internet was still 5 years from being publicly available.) At the time, the majority of people in my department still did cost estimates by hand on paper spreadsheets that were double-checked by admins. So, I am not sure what software isn’t being built that should be. But I am also not sure that the software that is being built is created efficiently, regardless of how hard people are trying.

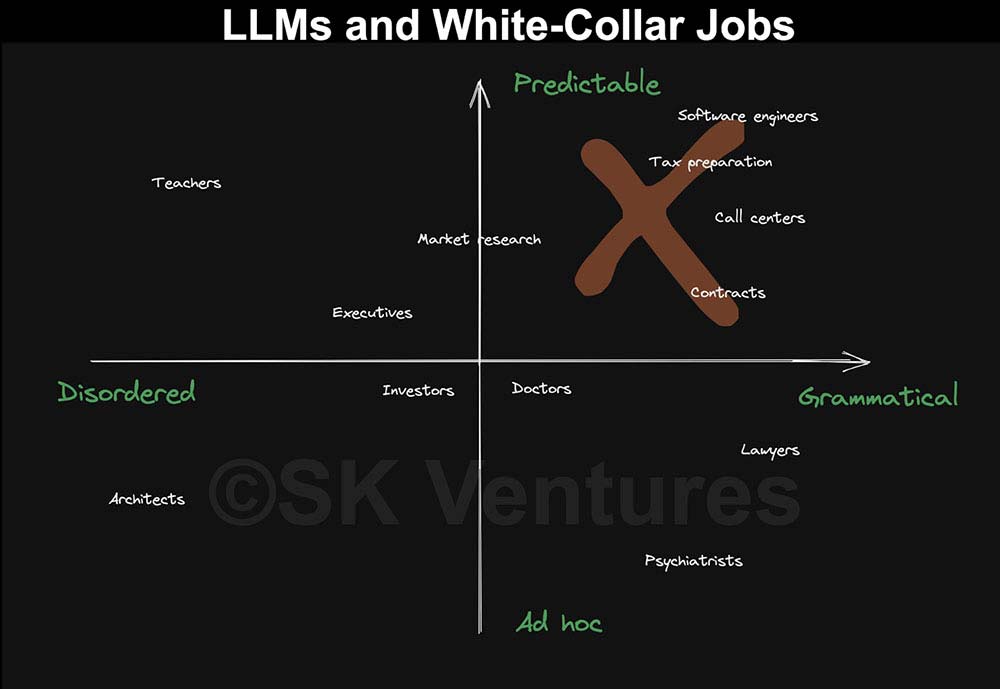

As the following figure shows, Large Language Model (LLM) impacts in the job market can be thought of as a 2x2 matrix. Along one axis we have how grammatical the domain is, by which we mean how rules-based are the processes governing how symbols are manipulated. Essays, for example, have rules (ask any irritated English teacher), so chat AIs based on LLMs can be trained to produce surprisingly good essays. Tax providers, contracts, and many other fields are in this box too.

The resulting labor disruption is likely to be immense, verging on unprecedented, in that top-right quadrant in the coming years. We could see millions of jobs replaced across a swath of occupations, and see it happen faster than in any previous wave of automation. The implications will be myriad for sectors, for tax revenues, and even for societal stability in regions or countries heavily reliant on some of those most-affected job classes. These broad and potentially destabilizing impacts should not be underestimated, and are very important.

This is a scarcity or fixed-pie version of the world, and one I reject. We have seen wave after wave of supposedly job-replacing technology. Yet people still find ways to take advantage of technology to simplify the mundane parts of their jobs as they transition into higher-value and more creative pursuits that technology (including AI) will always have a hard time replicating.

The primary disruption from AI will fall on the lower-value work that is already easily done offshore. How do I know that to be true? Look at the ways in which markets have already optimized lower-value activities by offshoring the work: IT captives and developers, offshore call centers, software-driven tax prep, offshore market research groups, and even offshore X-ray reading and interpretation. These are all rules-based, repetitive, low-creativity kinds of work.

In economic terms, AI is a complement to creatives and a substitute for commoditized work.

Now, let’s be clear. Can you say MAKE ME MICROSOFT WORD BUT BETTER, or SOLVE THIS CLASSIC COMPSCI ALGORITHM IN A NOVEL WAY? No, you can’t, which will cause many to dismiss these technologies as toys. And they are toys in an important sense. They are “toys” in that they are able to produce snippets of code for real people, especially non-coders, that one incredibly small group would have thought trivial, and another immense group would have thought impossible. That. Changes. Everything.

I agree with this to a point. In many ways, it’s like photography, which is a hobby of mine. As cell phones started to include really great cameras, SLRs and even digital SLRs started to decline in use … but the number of photos people took grew dramatically. However, just because more people can take a lot more pictures and software can digitally correct them doesn’t mean they will be composed in a way that people will find interesting or beautiful … you still need artists for that. This change will shift some work but also cause the best and most creative software engineers to be in more demand.

AI will not be able to create the next great application—creative, talented humans still need to do it, and those artists are still very rare. AI may allow those who see the elegant solution to build it faster and more efficiently. This is likely to lead to the merging of software product owner, architect, and engineer to create the next AI-facilitated killer app. As these apps are developed faster and evolve more quickly, they will have more utility-driving value and increase the need for more of these multi-skilled individuals.

The other interesting outcome of this is that this new generation of software creators will have much higher productivity, meaning they can (and will) demand more money for their services.

We have mentioned this technical debt a few times now, and it is worth emphasizing. We have almost certainly been producing far less software than we need. The size of this technical debt is not knowable, but it cannot be small, so subsequent growth may be geometric. This would mean that as the cost of software drops to an approximate zero, the creation of software predictably explodes in ways that have barely been previously imagined.

I’m not sure how we arrive at social technical debt based on the analysis in the authors’ article. But I can imagine that the amount of software produced is not optimal—though not because markets are imperfect. Instead, the amount of software produced is suboptimal because most companies are very inefficient at developing software. I don’t think John Carmack’s experience at Meta is unique to software development. Most software development organizations are inefficient and, in some cases, significantly inefficient. This result isn’t because of languages or tools. Rather it’s because of people and processes.

Anyone in the software business for any length of time knows how challenging an environment it is to get things done efficiently:

- Perverse incentives to build software quickly versus building it correctly to meet external timelines

- Focus on more PRs / velocity / story points instead of speed to value

- Incorrect financial models focusing on funding short-term projects instead of long-lasting product teams

- Poor visibility into the true cost of technical debt, resulting in a growing codebase on top of already overly complex and monolithic software

- Far too large software teams with extremely high coordination costs that are often focused on the wrong things

- Poor development environments reducing the ease and speed of iterating on code

- Disconnects between business and technical teams creating even higher coordination costs

- Misuse or misunderstanding of agile development

- Excess hiring (hiring for peak utilization, defensive hiring, or vanity hires)

- Etc.

My biggest concern isn’t that AI will transform software and destroy the job market for software engineers but rather that the law of unintended consequences may lead us to build inefficient code ridiculously faster. The flip side of that is the opportunity for highly-skilled software engineers to become even more creative artists by experimenting with how AI tools can make them more efficient and more effective, with higher productivity and greater demand.
